Twig Dev Notes

AI Dev Notes: Nov 10, 2025

Explore AI, LLM, RAG, Agent, MCP Techniques with the Twig dev team

Highlights

21 RAG Strategies: Over 300 Downloads

I am excited to share that the 21 RAG Strategies ebook was downloaded over 300 times this weekend. It's great to see how important RAG has become in today's enterprise AI. Remember to share what you liked or disliked about it.

Chandan

It’ll be 10 times bigger than the Industrial Revolution — and maybe 10 times faster.

Engineering Notes

Do you chunk?

Why Chunking Matters in RAG

In Retrieval-Augmented Generation (RAG), chunking is the quiet but critical step that determines how effectively your model understands and retrieves information. When large documents are split into smaller, semantically coherent "chunks," the retrieval system can locate and surface the most relevant pieces of context instead of feeding the model entire, noisy documents. Poorly chunked data often leads to irrelevant or incomplete answers, because the retriever returns text that is either too broad or disconnected from the query.
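To make the retrieval step concrete, here is a minimal Python sketch. It splits a short document into paragraph-level chunks and returns only the chunk most similar to a query. The bag-of-words cosine similarity is an assumption that stands in for a real embedding model. `retrieve` and the sample document are hypothetical illustrations, not part of any Twig API.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens (a toy tokenizer for illustration)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(chunks: list[str], query: str, k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query (hypothetical helper)."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(Counter(tokenize(c)), q), reverse=True)
    return ranked[:k]


document = (
    "Refunds are issued within 14 days of a return request.\n\n"
    "Shipping to Europe takes 5 to 7 business days.\n\n"
    "Our support team is available by chat on weekdays."
)

# Paragraph-level chunks: each one covers a single topic.
chunks = [p.strip() for p in document.split("\n\n") if p.strip()]

# Only the shipping paragraph is passed on as context, not the whole document.
print(retrieve(chunks, "How long does shipping to Europe take?"))
# → ['Shipping to Europe takes 5 to 7 business days.']
```

If the whole document were a single chunk, the refund and support text would reach the model along with the answer. Focused chunks keep the context small and on-topic.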

The Balance Between Granularity and Context

Choosing the right chunk size is a trade-off between precision and completeness. Smaller chunks improve retrieval precision but risk losing surrounding context. Larger chunks preserve meaning but blend several topics into one embedding, which makes matches less precise. Smart chunking strategies, such as overlapping windows or adaptive chunking based on sentence boundaries, headings, or semantic similarity, help maintain coherence without sacrificing precision. The goal is for each chunk to answer a focused question on its own while still fitting into the broader narrative of the document.
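The overlapping-window strategy can be sketched as follows. This sketch assumes chunk sizes are measured in whitespace-separated words, while production pipelines usually count model tokens instead. `sliding_window_chunks` and its default sizes are illustrative only, not a recommended configuration.

```python
def sliding_window_chunks(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of chunk_size words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window reaches the end, so no trailing chunk is pure overlap.
        if start + chunk_size >= len(words):
            break
    return chunks


print(sliding_window_chunks("a b c d e f g h i j", chunk_size=4, overlap=1))
# → ['a b c d', 'd e f g', 'g h i j']
```

Each chunk repeats the last word of the previous one. As a result, text near a boundary appears in two chunks and keeps some of its surrounding context. A larger overlap preserves more context at the cost of more chunks to embed and store.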

Modern Chunking Strategies

Advanced RAG pipelines now use hybrid and dynamic chunking methods. Sentence-level chunking works well for FAQs and structured data, while semantic chunking powered by embeddings identifies natural topic shifts in unstructured text. Some systems also use hierarchical chunking, creating multi-level representations (from fine-grained facts to higher-level summaries) so that each query can be answered at the right level of detail. In short, chunking isn't just preprocessing; it's the foundation for building accurate, efficient, and trustworthy RAG systems.
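Semantic chunking can be sketched by first splitting text into sentences (sentence-level chunking) and then starting a new chunk wherever adjacent sentences are dissimilar. This minimal sketch rests on two assumptions. It uses a naive regex sentence splitter, and it uses a hypothetical `embed` function that builds bag-of-words vectors where a real pipeline would call an embedding model. The 0.2 threshold is arbitrary and would need tuning on real data.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def embed(sentence: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_chunks(text: str, threshold: float = 0.2) -> list[str]:
    """Group consecutive sentences, starting a new chunk at each detected topic shift."""
    sentences = split_sentences(text)
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    prev = embed(sentences[0])
    for sentence in sentences[1:]:
        vec = embed(sentence)
        if cosine(prev, vec) < threshold:
            chunks.append([sentence])  # similarity dropped: treat as a new topic
        else:
            chunks[-1].append(sentence)  # same topic: extend the current chunk
        prev = vec
    return [" ".join(c) for c in chunks]


text = (
    "Vector databases store embeddings. "
    "Vector databases index embeddings for fast search. "
    "Our office closes at noon on Friday."
)
for chunk in semantic_chunks(text):
    print(chunk)
```

The first two sentences share vocabulary and stay together, while the unrelated third sentence becomes its own chunk. Comparing each sentence only with its predecessor is the simplest design. A common variant compares each new sentence against the running chunk as a whole instead.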

About Twig

Ship RAG projects faster with Twig

Twig is an AI engineering platform built for teams shipping Retrieval-Augmented Generation (RAG) and agentic systems to production. It automates the hardest parts of enterprise AI—data ingestion, chunking, vectorization, and evaluation—so developers can focus on building intelligent copilots and support automation. Trusted by fast-growing startups and enterprise teams alike, Twig helps you go from prototype to production up to 80% faster.

Auto-ingestion: Instantly connects to your data sources and cleans the data.

Smart chunking: Optimizes context size for precise retrieval.

Auto-indexing: Embeds and stores data at scale.

Self-evaluation: Monitors and improves answer quality.

Fast deploy: Ships RAG pipelines to production up to 80% faster.

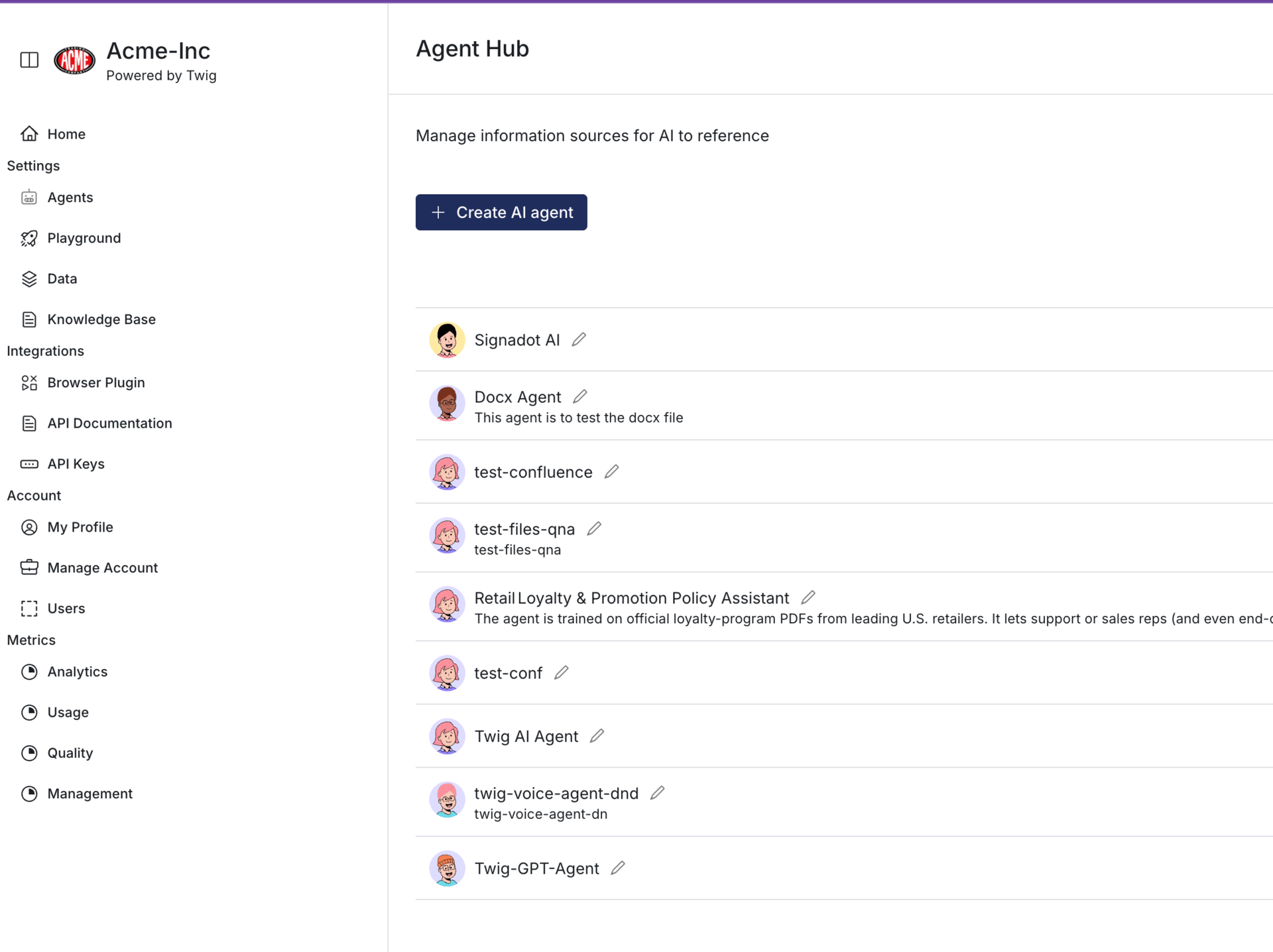

Agent Builder

Build AI Agents in Minutes, Not Months

Twig’s Agent Builder makes creating intelligent, task-specific AI agents as simple as assembling building blocks. Instead of stitching together APIs, prompts, and retrieval systems manually, teams can define an agent’s role, connect it to knowledge sources, and deploy it instantly—all from one unified interface. The Agent Builder handles context management, memory, and reasoning flow automatically, so developers can focus on outcomes, not infrastructure.

From Prototype to Production Effortlessly

Each agent built on Twig is powered by enterprise-grade RAG and evaluation pipelines, which produce reliable, context-aware responses grounded in real business data. Teams can customize behaviors, track performance, and iterate without writing extra code. Whether it's a support bot, a knowledge assistant, or an internal copilot, Twig turns AI agent creation into a fast, repeatable process, making enterprise-ready automation accessible to every developer.